LMOD+ extends our preliminary LMOD with three major enhancements: (1) a nearly 50% dataset expansion; (2) broadened task coverage, including binary disease diagnosis, multi-class diagnosis, and severity classification; and (3) a systematic evaluation of 24 state-of-the-art MLLMs.

Research

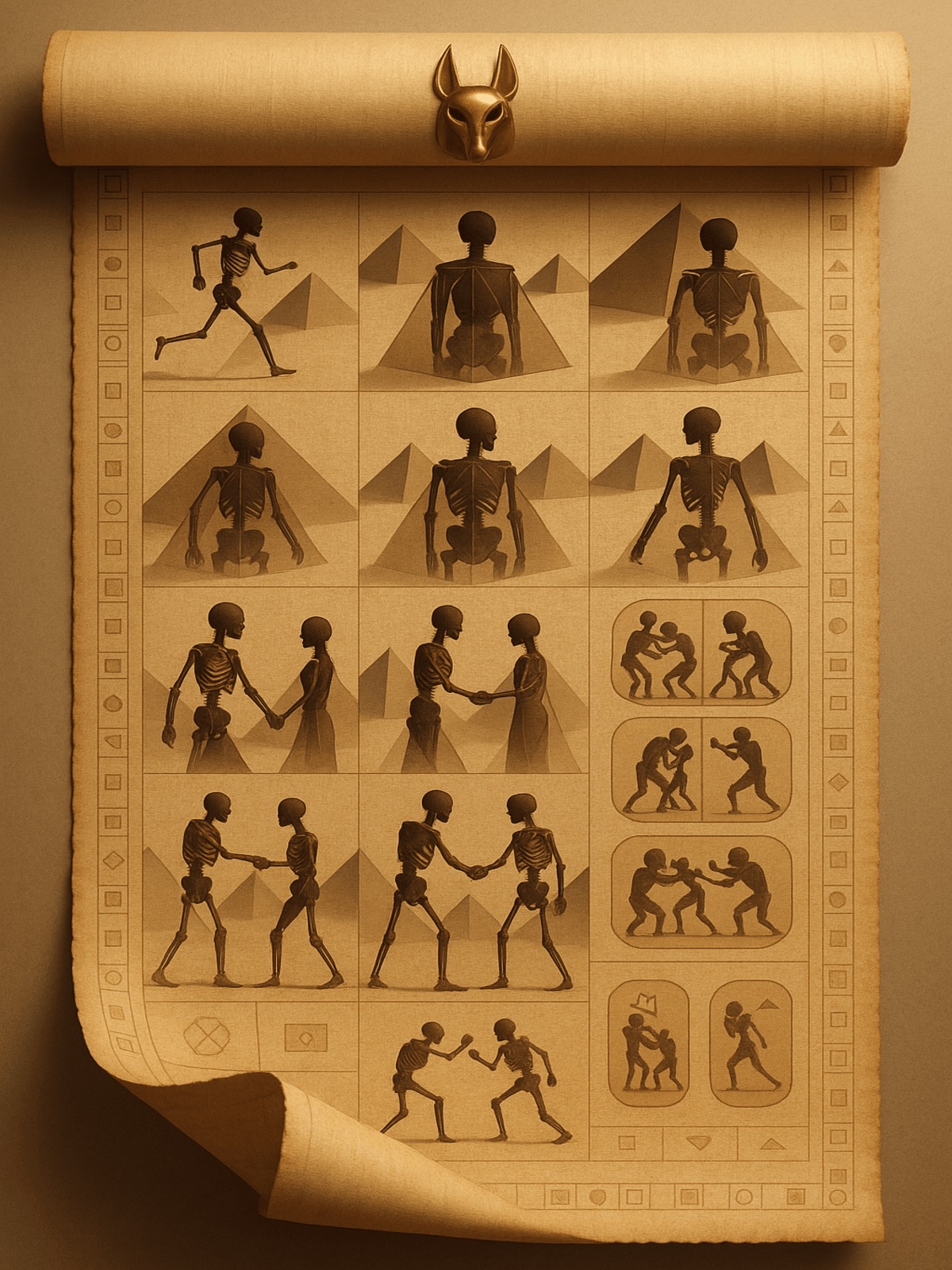

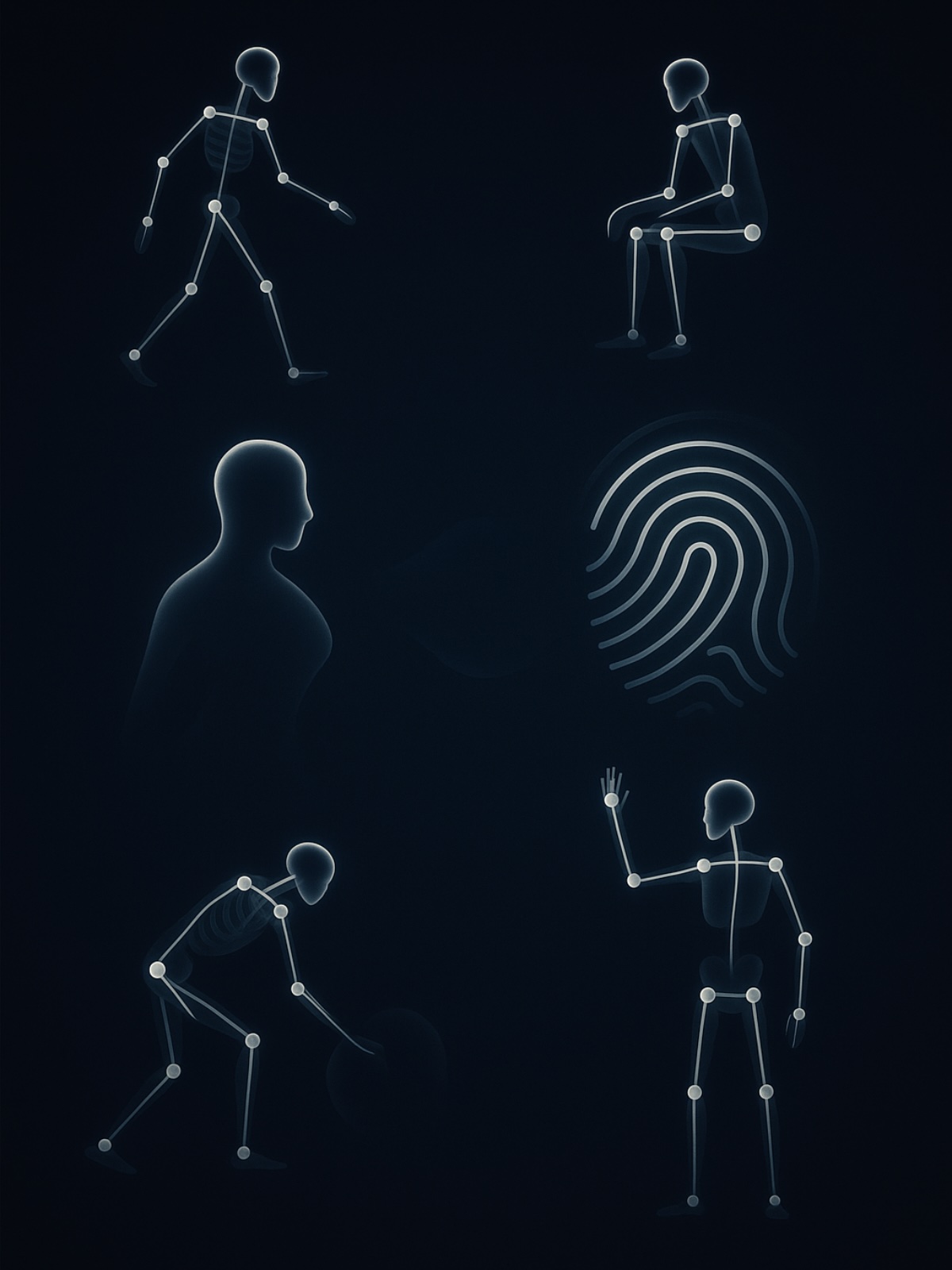

This paper presents a representation-centric survey of skeleton-based action recognition (joints, bones, motion, derived features), introduces ANUBIS, a 102-class, multi-view (including back-view), multi-person dataset, and benchmarks existing methods.
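The joint, bone, and motion representations named above can be illustrated with a minimal sketch. It is not taken from the survey: the function name `derive_streams`, the `(frames, joints, coords)` array layout, and the `parents` list are assumptions made for illustration, using the common convention that a bone is a joint minus its parent joint and motion is the frame-to-frame difference.

```python
import numpy as np

def derive_streams(joints, parents):
    """Derive bone and motion streams from joint coordinates.

    joints:  array of shape (T, J, C): frames, joints, coordinates (assumed layout).
    parents: length-J sequence giving each joint's parent index;
             the root joint points to itself (assumed convention).
    """
    joints = np.asarray(joints, dtype=float)
    parents = np.asarray(parents)
    # Bone vector: each joint relative to its parent (zero for the root).
    bones = joints - joints[:, parents, :]
    # Motion: difference between consecutive frames (zero for the first frame).
    motion = np.zeros_like(joints)
    motion[1:] = joints[1:] - joints[:-1]
    return bones, motion
```

Both outputs keep the input shape, so they can be fed to the same recognizer as additional input streams.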

This survey systematizes plane geometry problem solving under an encoder-decoder framework, categorizes encoders/decoders and output formats across PGPS benchmarks, and highlights key challenges (encoder-stage hallucinations, benchmark data leakage) with directions for future research.

GeoDANO is a plane geometry VLM that pairs a CLIP-trained, few-shot domain-adapted vision encoder (GeoCLIP), pretrained on synthetic diagram and caption pairs, with an LLM to robustly extract visual premises including OCR across styles, outperforming specialized and generalist baselines on MathVerse.

This study proposes a systematic data and evaluation pipeline that repurposes existing datasets to curate a dataset for the development and evaluation of large vision-language models in ophthalmology.

HandCraft is a plug-and-play framework that detects malformed hands in diffusion-generated images and restores them by aligning a parametric hand template (mask + depth) to condition ControlNet, requiring no model retraining.

This paper introduces Set-of-Vision (SoV) prompting, which enhances emotion recognition in Vision Large Language Models by using spatial visual cues like bounding boxes, numbers, and facial landmarks to precisely identify and analyze facial expressions while preserving image context.

Position-Sensing Graph Neural Networks (PSGNNs) learn to automatically select optimal anchor nodes in graphs through backpropagation rather than random selection, enabling better position-awareness.

This paper shows skeleton datasets leak sensitive attributes (≈87% gender, ≈80% re-ID) and proposes an adversarial anonymization framework that suppresses private cues while largely preserving action recognition accuracy.

Treating denoising as distribution disentanglement, the paper introduces FDN: an invertible normalizing-flow network that learns noisy-image distributions and masks noise latents to reconstruct clean images, achieving state-of-the-art AWGN/SIDD results efficiently.

The paper introduces two plug-and-play temporal modules, Discrete Cosine Encoding (injecting frequency-aware, noise-robust features) and Chronological Loss (enforcing frame order), that consistently improve diverse skeleton-based action recognizers.
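As a rough illustration of frequency-aware temporal features, the sketch below applies an unnormalized DCT-II along the time axis of a skeleton sequence and keeps only the lowest-frequency coefficients. It is not the paper's module: the function names, the `(frames, joints, coords)` layout, and the choice to truncate to `num_coeffs` coefficients are all assumptions for illustration.

```python
import numpy as np

def dct_basis(num_frames, num_coeffs):
    """Unnormalized DCT-II basis of shape (num_coeffs, num_frames)."""
    n = np.arange(num_frames)
    k = np.arange(num_coeffs)[:, None]
    return np.cos(np.pi / num_frames * (n + 0.5) * k)

def temporal_dct_features(seq, num_coeffs):
    """Project a (T, J, C) sequence onto its first num_coeffs temporal DCT coefficients.

    Dropping the high-frequency coefficients discards fast frame-to-frame
    jitter, which is one plausible reading of "noise-robust" here (assumption).
    """
    seq = np.asarray(seq, dtype=float)
    basis = dct_basis(seq.shape[0], num_coeffs)
    # Contract the time axis: (K, T) x (T, J, C) -> (K, J, C).
    return np.tensordot(basis, seq, axes=(1, 0))
```

For a sequence that does not change over time, all of the energy lands in coefficient 0 and the higher coefficients are zero, which gives a quick sanity check for the transform.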